In the previous article we raised the question and reached a conclusion:

If services need the same minimum computing resources to run, then a monolith and microservices perform the same.

In this article we’ll examine what happens when the services have different minimum computing resources.

TL;DR

See the conclusion.

Method and Assumptions

The method and assumptions are basically the same as in the previous article.

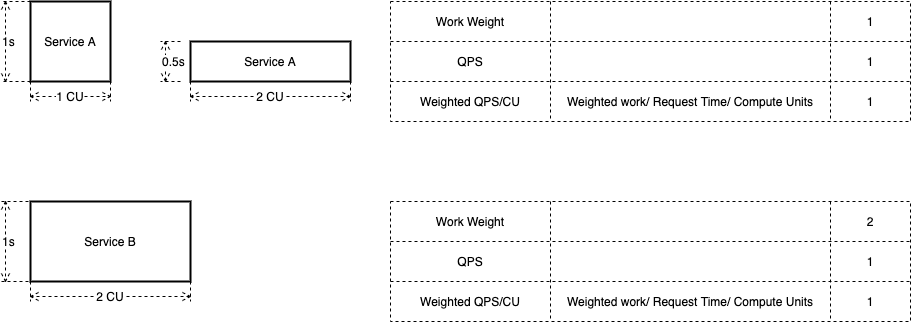

The difference is that A and B here have the same response time (RT) but different minimum compute units (CU, i.e. machine hardware).
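The comparisons that follow report two metrics, QPS/CU and weighted QPS/CU. As a minimal Python sketch, assuming hypothetical sizes A = 1 CU and B = 2 CU with RT = 1 s, and reading "weighted QPS/CU" as QPS/CU where each request is weighted by the CU-seconds it consumes (the article does not spell this definition out, so it is an interpretation), the metrics could be computed like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    """A service described by its response time and minimum compute footprint."""
    name: str
    min_cu: int      # minimum compute units (CU) one request occupies
    rt: float = 1.0  # response time in seconds; equal for A and B in this article

    def cu_seconds(self) -> float:
        """CU-seconds a single request consumes."""
        return self.min_cu * self.rt


def qps_per_cu(served: list[Service], provisioned_cu: int, duration: float) -> float:
    """Requests served per second per provisioned CU."""
    return len(served) / (provisioned_cu * duration)


def weighted_qps_per_cu(served: list[Service], provisioned_cu: int, duration: float) -> float:
    """Assumed reading of 'weighted QPS/CU': each request counts by its CU-seconds,
    so the result is the share of provisioned CU-seconds doing useful work."""
    useful = sum(s.cu_seconds() for s in served)
    return useful / (provisioned_cu * duration)


# Hypothetical sizes: A is the light service, B the heavy one.
A = Service("A", min_cu=1)
B = Service("B", min_cu=2)

# Example: a 2-CU instance serves A, A and B within 2 seconds.
served = [A, A, B]
print(qps_per_cu(served, provisioned_cu=2, duration=2.0))           # 0.75
print(weighted_qps_per_cu(served, provisioned_cu=2, duration=2.0))  # 1.0
```

Under these assumptions, two A requests and one B request packed onto a 2-CU instance over 2 s give 0.75 and 1.0, the same figures as the 2:1 rows of the parallel summary.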

A and B run in parallel

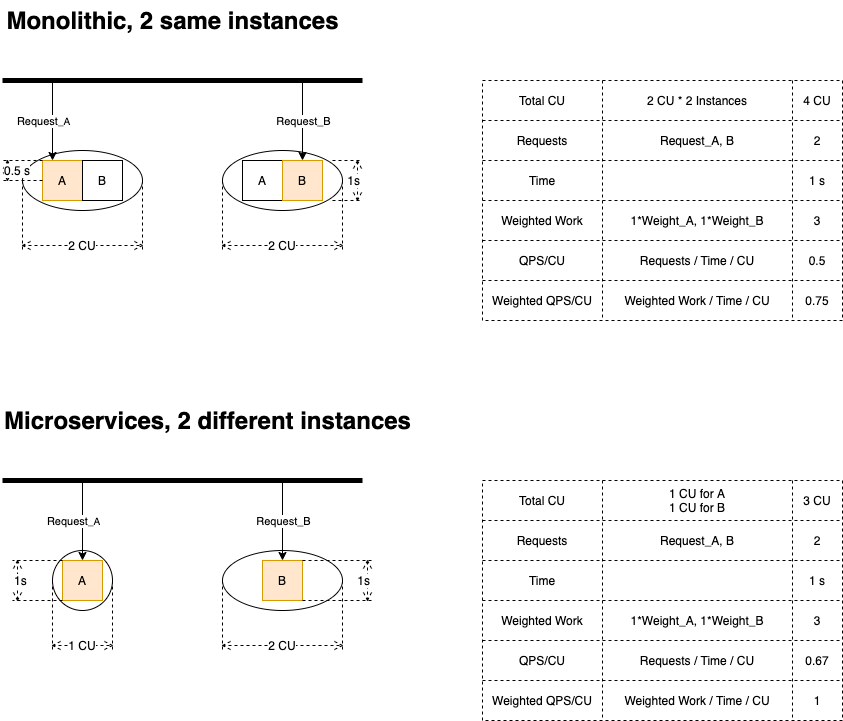

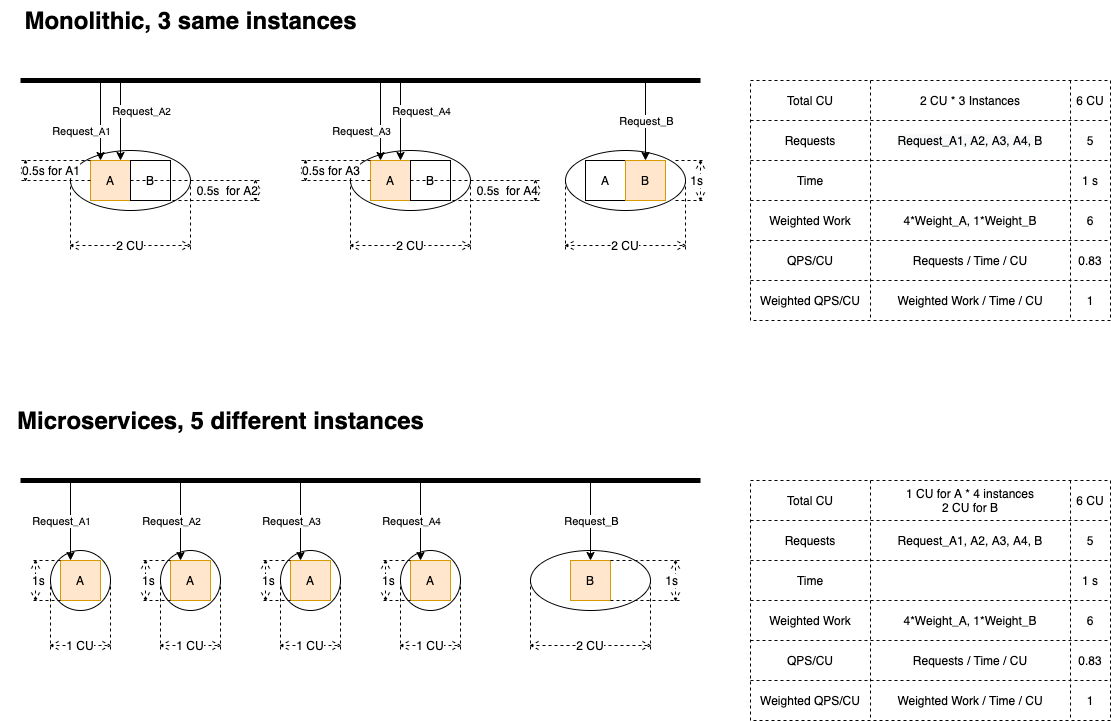

Parallel, request distribution is A:B = 1:1

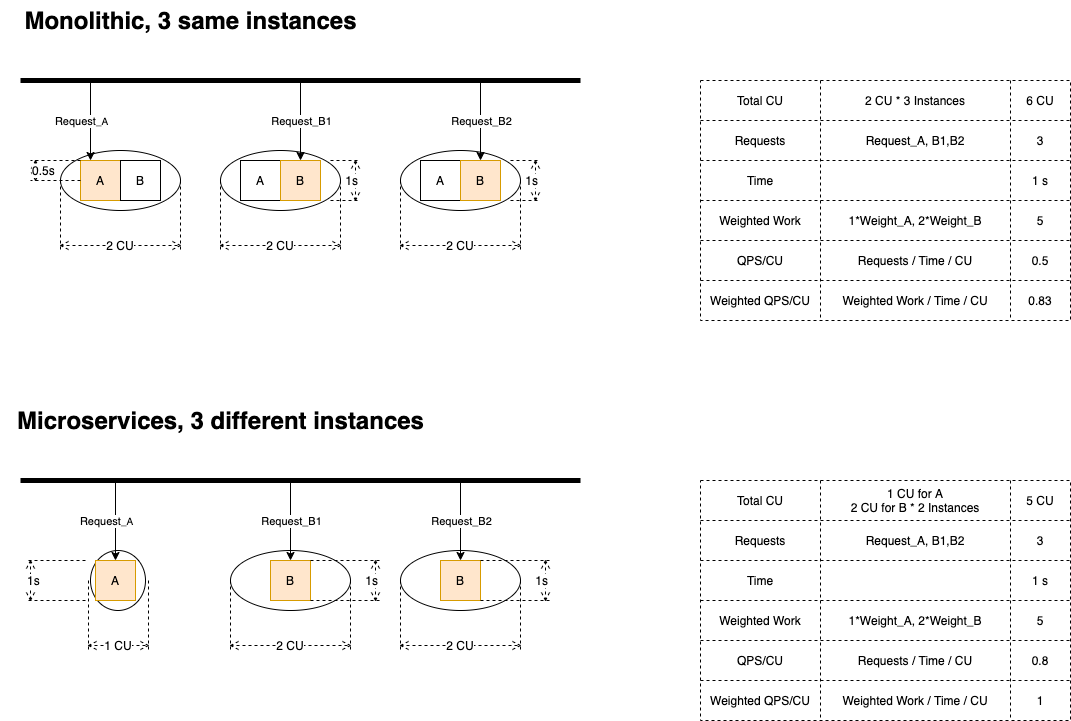

Parallel, request distribution is A:B = 1:2

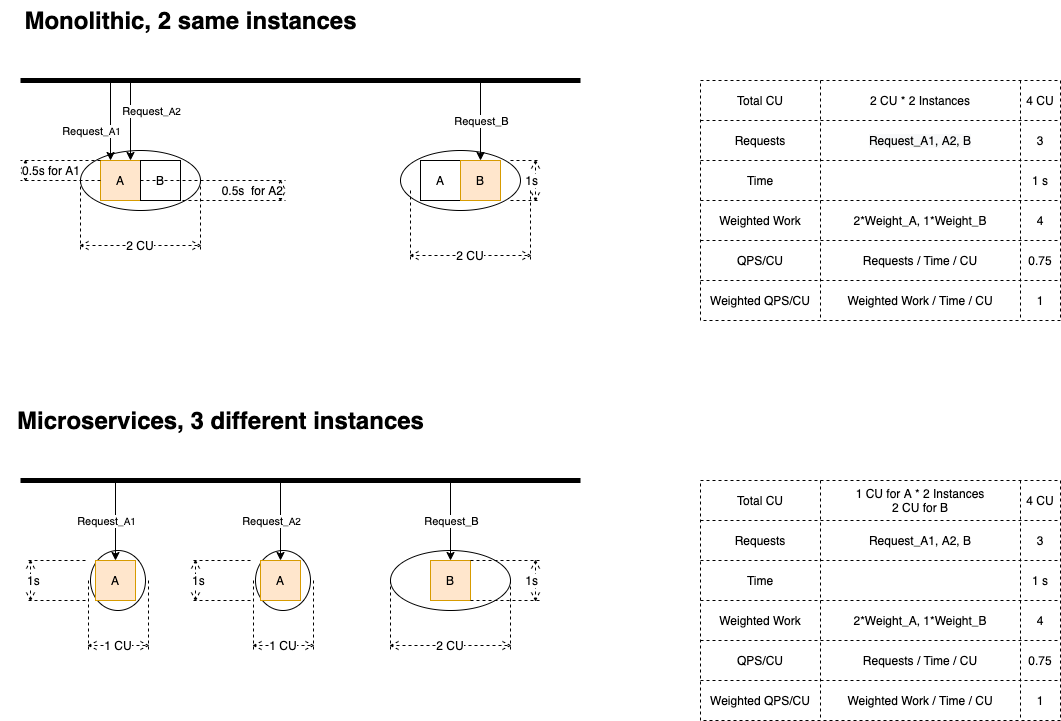

Parallel, request distribution is A:B = 2:1

Parallel, request distribution is A:B = 4:1

A summary of parallel mode

| Request distribution (A:B) | Mode | QPS/CU | Weighted QPS/CU | Winner |
| --- | --- | --- | --- | --- |
| 1:1 | Bundled | 0.5 | 0.67 | |
| 1:1 | Distributed | 0.33 | 1 | ✔️ |
| 1:2 | Bundled | 0.5 | 0.83 | |
| 1:2 | Distributed | 0.8 | 1 | ✔️ |
| 2:1 | Bundled | 0.75 | 1 | Draw |
| 2:1 | Distributed | 0.75 | 1 | Draw |
| 4:1 | Bundled | 0.83 | 1 | Draw |
| 4:1 | Distributed | 0.83 | 1 | Draw |

Distributed mode performs better than or as well as bundled mode. Specifically:

- In bundled mode, if the request distribution forces some computing resources to sit idle while others are working (there is 0.5 s of idle time in the 1:1 and 1:2 cases), bundled mode performs worse than distributed mode.

- Otherwise, the two modes perform the same.
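To see where the idle time in bundled mode comes from, here is a minimal Python simulation. It assumes hypothetical sizes (A = 1 CU, B = 2 CU, RT = 1 s), a bundled instance sized to the heavier service, and first-come, first-served packing: each RT slot takes queued requests in order until the next one no longer fits. These scheduling rules are illustrative assumptions; the diagrams above may schedule requests differently.

```python
from fractions import Fraction

# Hypothetical sizes: minimum CUs per request; RT = 1 s for both services.
CU = {"A": 1, "B": 2}


def bundled_run(ratio_a: int, ratio_b: int) -> tuple[int, Fraction]:
    """Serve one A:B cycle of requests on a single bundled instance.

    The instance is sized to the heaviest service. Requests are served in
    arrival order (assumed: all A first, then all B); each RT slot is filled
    until the next request no longer fits in the free CUs.
    Returns (idle CU-seconds, useful / provisioned CU-seconds).
    """
    requests = ["A"] * ratio_a + ["B"] * ratio_b
    capacity = max(CU.values())
    slots, i = 0, 0
    while i < len(requests):
        free = capacity
        while i < len(requests) and CU[requests[i]] <= free:
            free -= CU[requests[i]]
            i += 1
        slots += 1  # one slot lasts one RT (1 s)
    provisioned = capacity * slots         # CU-seconds paid for
    useful = sum(CU[r] for r in requests)  # CU-seconds doing work
    return provisioned - useful, Fraction(useful, provisioned)


for ratio in [(1, 2), (2, 1), (4, 1)]:
    idle, utilization = bundled_run(*ratio)
    print(f"A:B = {ratio[0]}:{ratio[1]}  idle CU-s = {idle}  weighted QPS/CU = {float(utilization):.2f}")
```

Under these assumptions the 1:2 mix leaves 1 CU-second idle per cycle (a light A request cannot share its slot with a heavy B), while the 2:1 and 4:1 mixes fill every slot. In distributed mode each service runs on right-sized instances, so no CU-seconds are wasted in any mix.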

A and B run in series

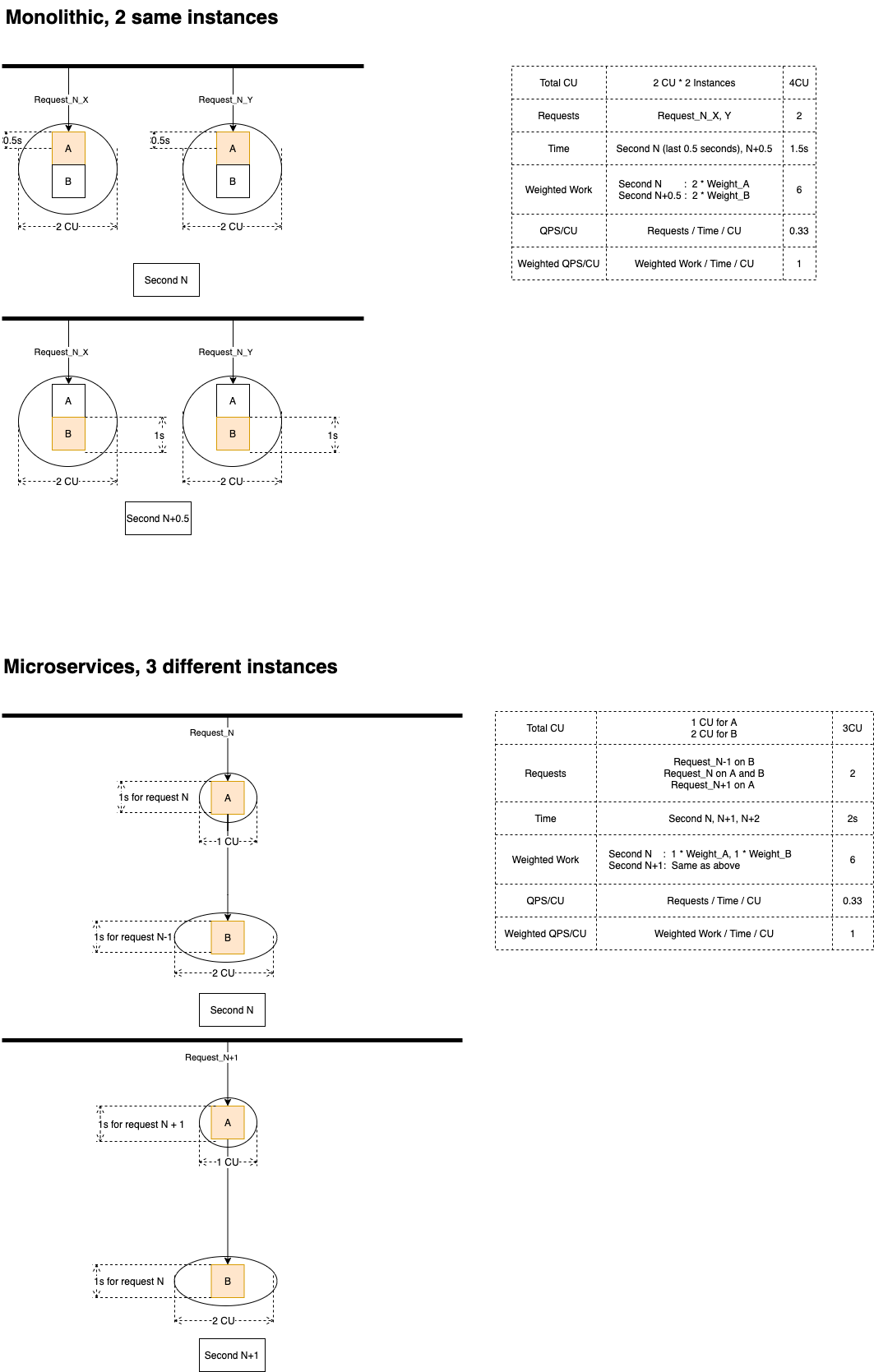

A calls B

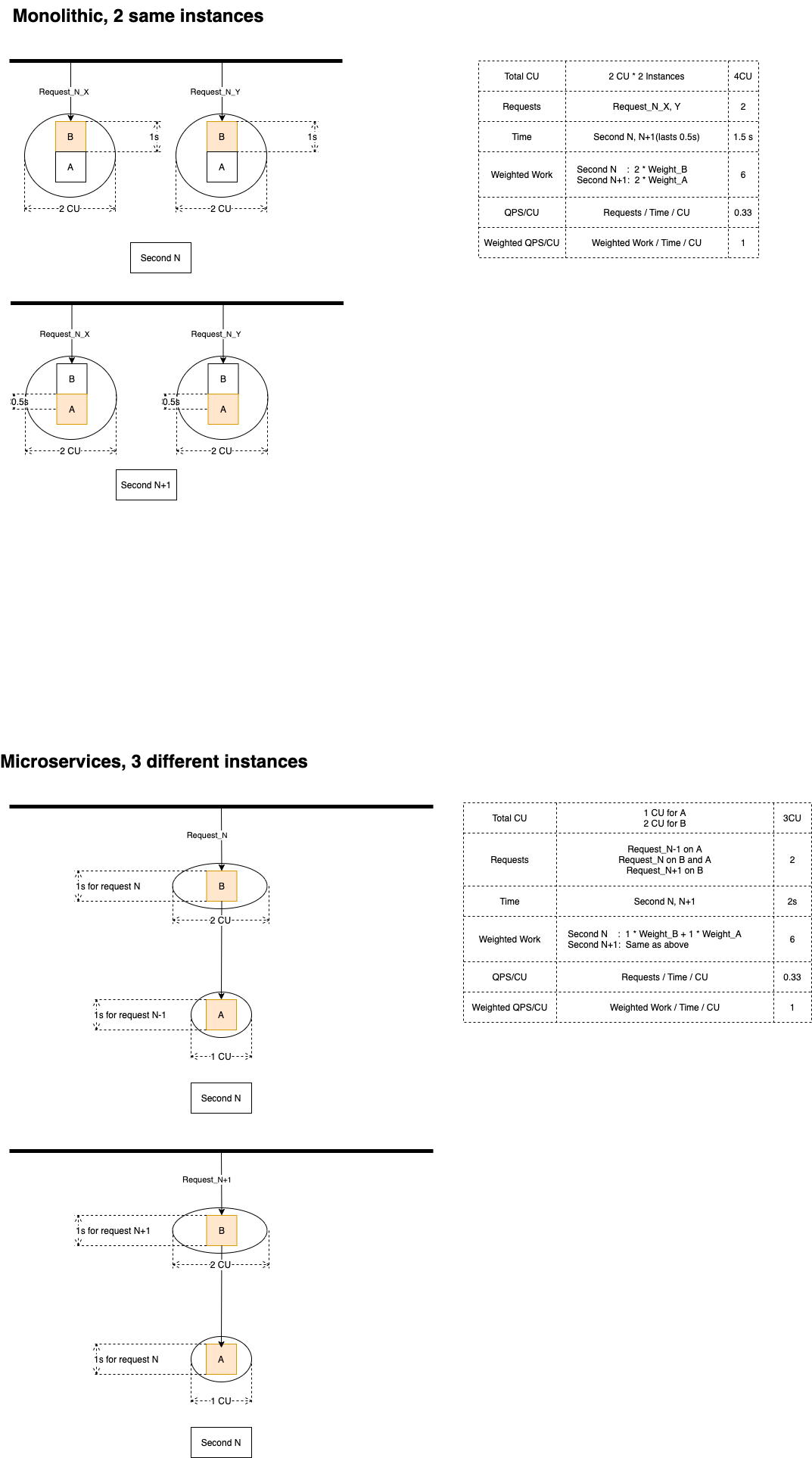

B calls A

A summary of serial mode

| Who calls whom | Mode | QPS/CU | Weighted QPS/CU |
| --- | --- | --- | --- |
| Light service calls heavy service | Bundled | 0.33 | 1 |
| Light service calls heavy service | Distributed | 0.33 | 1 |
| Heavy service calls light service | Bundled | 0.33 | 1 |
| Heavy service calls light service | Distributed | 0.33 | 1 |

Bundled mode and distributed mode always perform the same!

Why? The diagrams above show that even in bundled mode, the compute resources are never idle.
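The serial result reduces to simple arithmetic. Here is a minimal Python sketch, again with hypothetical sizes (light = 1 CU, heavy = 2 CU, RT = 1 s) and taking from the diagrams that no CU ever idles: each end-to-end request runs both services once, so its cost is the sum of their CU-seconds, and that sum does not depend on who calls whom.

```python
from fractions import Fraction

# Hypothetical minimum CUs per request, and a shared response time in whole seconds.
MIN_CU = {"light": 1, "heavy": 2}
RT = 1


def serial_metrics(call_chain: list[str]) -> tuple[Fraction, Fraction]:
    """Return (QPS/CU, weighted QPS/CU) for a chain of calls with no idle CUs.

    Every end-to-end request runs each service in the chain once, so it consumes
    sum(min_cu * RT) CU-seconds; the call order does not change that sum.
    """
    cu_seconds = sum(MIN_CU[service] * RT for service in call_chain)
    qps_per_cu = Fraction(1, cu_seconds)  # one request per cu_seconds of capacity
    weighted = qps_per_cu * cu_seconds    # useful / provisioned CU-seconds
    return qps_per_cu, weighted


print(serial_metrics(["light", "heavy"]))  # light service calls heavy service
print(serial_metrics(["heavy", "light"]))  # heavy service calls light service
```

Both orders give QPS/CU = 1/3 ≈ 0.33 and weighted QPS/CU = 1, matching the serial summary. The calculation does not distinguish bundled from distributed mode because, with no idle CUs, both pay for exactly the CU-seconds they use.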

Conclusion

If services have different minimum computing resources:

| Service topology | Condition | Performance winner |
| --- | --- | --- |
| Services in parallel | Request distribution causes some idle time regardless of instance allocation | Distributed mode |
| Services in parallel | Request distribution causes no idle time if instance allocation is good | Draw |
| Services in series | In a perfect world where scaling up leads to linear performance improvement | Draw |

Both the previous article and this one rely on assumptions that only hold in an ideal world. For a discussion of the real world, see part 3.